- 2 Posts

- 55 Comments

11·3 months ago

That’s a great line of thought. Take the algorithm “simulate a human brain”. That would obviously break the paper’s argument, so to take the paper’s claims at face value you’d have to find out why it doesn’t apply here.

22·3 months ago

There are a number of major flaws with it:

- Assume the paper is completely true. It has only proved the algorithmic complexity of the problem, but so what? What if the general case is NP-hard, but the case we care about isn’t? That’s been true for other problems, so why not this one?

- It proves something in a model. So what? Prove that the result applies to the real world.

- Replace “human-like” with something trivial like “tree-like”. The paper then proves that we’ll never achieve tree-like intelligence?
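To make the first point concrete (this example is my own, not from the paper): subset sum is NP-hard in general, yet when the target value is small, a textbook dynamic program solves it in O(n · target) time. A minimal Rust sketch, assuming non-negative integer inputs; `subset_sum` is a hypothetical name for illustration:

```rust
/// Returns true if some subset of `items` sums exactly to `target`.
/// Runs in O(items.len() * target) time, which is polynomial whenever
/// `target` is small, even though subset sum is NP-hard in general.
fn subset_sum(items: &[usize], target: usize) -> bool {
    // reachable[s] is true if some subset of the items seen so far sums to s.
    let mut reachable = vec![false; target + 1];
    reachable[0] = true; // the empty subset sums to 0
    for &item in items {
        // Walk downward so each item is used at most once.
        // If item > target, this range is empty and the item is skipped.
        for s in (item..=target).rev() {
            if reachable[s - item] {
                reachable[s] = true;
            }
        }
    }
    reachable[target]
}

fn main() {
    let items = [3, 34, 4, 12, 5, 2];
    println!("{}", subset_sum(&items, 9)); // true (4 + 5)
    println!("{}", subset_sum(&items, 30)); // false
}
```

This doesn’t contradict NP-hardness: the running time is polynomial in the *value* of `target`, not in the number of bits needed to write it down. It only shows that a restricted class of inputs can be tractable even when the general problem isn’t.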

IMO there are also flaws in the argument itself, but those are more relevant.

63·3 months ago

This is a silly argument:

> […] But even if we give the AGI-engineer every advantage, every benefit of the doubt, there is no conceivable method of achieving what big tech companies promise.’
>
> That’s because cognition, or the ability to observe, learn and gain new insight, is incredibly hard to replicate through AI on the scale that it occurs in the human brain. ‘If you have a conversation with someone, you might recall something you said fifteen minutes before. Or a year before. Or that someone else explained to you half your life ago. Any such knowledge might be crucial to advancing the conversation you’re having. People do that seamlessly’, explains van Rooij.
>
> ‘There will never be enough computing power to create AGI using machine learning that can do the same, because we’d run out of natural resources long before we’d even get close,’ Olivia Guest adds.

That’s as shortsighted as the “I think there is a world market for maybe five computers” quote, or the worry that NYC would be buried under mountains of horse poop before cars were invented. Maybe transformers aren’t the path to AGI, but there’s no reason to think we can’t achieve it in general unless you’re religious.

EDIT: From the paper:

> The remainder of this paper will be an argument in ‘two acts’. In ACT 1: Releasing the Grip, we present a formalisation of the currently dominant approach to AI-as-engineering that claims that AGI is both inevitable and around the corner. We do this by introducing a thought experiment in which a fictive AI engineer, Dr. Ingenia, tries to construct an AGI under ideal conditions. For instance, Dr. Ingenia has perfect data, sampled from the true distribution, and they also have access to any conceivable ML method—including presently popular ‘deep learning’ based on artificial neural networks (ANNs) and any possible future methods—to train an algorithm (“an AI”). We then present a formal proof that the problem that Dr. Ingenia sets out to solve is intractable (formally, NP-hard; i.e. possible in principle but provably infeasible; see Section “Ingenia Theorem”). We also unpack how and why our proof is reconcilable with the apparent success of AI-as-engineering and show that the approach is a theoretical dead-end for cognitive science. In “ACT 2: Reclaiming the AI Vertex”, we explain how the original enthusiasm for using computers to understand the mind reflected many genuine benefits of AI for cognitive science, but also a fatal mistake. We conclude with ways in which ‘AI’ can be reclaimed for theory-building in cognitive science without falling into historical and present-day traps.

That’s a silly argument. It sets up a strawman and knocks it down. Just because you create a model and prove something in it doesn’t mean it has any relationship to the real world.

For a direct replacement, you might want to consider enums, something like:

```rust
enum Strategy {
    Foo,
    Bar,
}
```

That’s going to be a lot more ergonomic than shuffling trait objects around. You can do stuff like:

```rust
fn execute(strategy: Strategy) {
    match strategy {
        Strategy::Foo => { /* ... */ }
        Strategy::Bar => { /* ... */ }
    }
}
```

If you have a known set of strategies that isn’t extensible, enums are good. If you want third-party code to be able to add new strategies, the boxed trait object approach works. Also consider the simplest approach of just having functions like this:

```rust
fn execute_foo() { /* ... */ }
fn execute_bar() { /* ... */ }
```

Sometimes Rust encourages not trying to be too clever, like having separate `get` and `get_mut` methods instead of trying to abstract over the mutability.
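A runnable sketch of the enum and trait-object approaches side by side. `Strategy`, `Foo`, `Bar`, and `execute` come from the snippets above; the `Execute` trait, the `Baz` type, `run_all`, and the string return values are hypothetical, added only so there’s something to print:

```rust
// Closed set: adding a variant means editing this enum and every `match` on it.
#[derive(Clone, Copy)]
enum Strategy {
    Foo,
    Bar,
}

fn execute(strategy: Strategy) -> &'static str {
    // The compiler rejects this match if a variant is left unhandled.
    match strategy {
        Strategy::Foo => "foo",
        Strategy::Bar => "bar",
    }
}

// Open set: any crate can implement this hypothetical trait.
trait Execute {
    fn execute(&self) -> &'static str;
}

// Hypothetical strategy that third-party code might add.
struct Baz;

impl Execute for Baz {
    fn execute(&self) -> &'static str {
        "baz"
    }
}

fn run_all(strategies: &[Box<dyn Execute>]) -> Vec<&'static str> {
    // Each call goes through dynamic dispatch on the boxed trait object.
    strategies.iter().map(|s| s.execute()).collect()
}

fn main() {
    let closed: Vec<&str> = [Strategy::Foo, Strategy::Bar]
        .iter()
        .copied()
        .map(execute)
        .collect();
    println!("{:?}", closed); // ["foo", "bar"]

    let open: Vec<Box<dyn Execute>> = vec![Box::new(Baz)];
    println!("{:?}", run_all(&open)); // ["baz"]
}
```

The trade-off is the one described above: the `match` has to be updated whenever a variant is added, while `Box<dyn Execute>` lets outside code add strategies at the cost of a heap allocation and dynamic dispatch per call.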

Not sure if this is my app or a Lemmy setting, but I only see it once, because it’s properly cross-posted.

I couldn’t view this with Firefox or GNOME. ImageMagick to the rescue, though:

```sh
convert https://pub-be81109990da4727bc7cd35aa531e6b2.r2.dev/weofihweiof.jpg meme.jpg
```

86·1 year ago

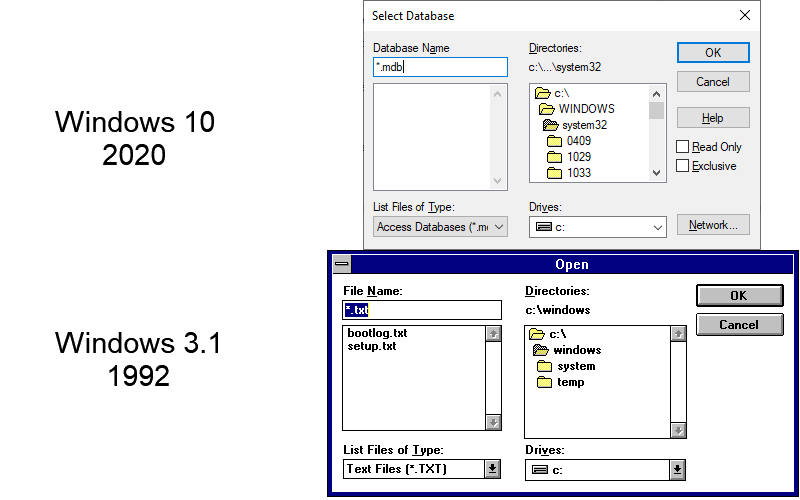

86·1 year agoThere’s some even older UI bits buried around in there:

29·1 year ago

Man, what a clusterfuck. Things still don’t really add up based on public info. I’m sure this will be the end of any real attempts at safeguards, but with the board acting the way it did, I don’t know that there would’ve been any even without him returning. You know the board fucked up hard when some SV tech bro looks like the good guy.

1·1 year ago

> But personally I’ll continue to advocate for technology which empowers people and culture, and not the other way around.

You won’t achieve this goal by aiding the gatekeepers. Stop helping them by trying to misapply copyright.

> Any experienced programmer knows that GPL code is still subject to copyright […]

GPL is a clever hack of a bad system. It would be better if copyright didn’t exist, and I say that as someone that writes AGPL code.

I think you misunderstood what I meant. We should drop copyright, and pass a new law where if you use a model, or contribute to one, or a model is used against you, that model must be made available to you. Similar in spirit to the GPL, but not reliant on an outdated system.

This would catch so many more use cases than trying to cram copyright where it doesn’t apply. No more:

- Handful of already-rich companies building an AI moat that keeps newcomers out

- Credit agencies assigning you a black box score that affects your entire life

- Minorities being denied bail because of a black box model

- Being put on a no-fly list with no way to know that you’re on it or why

- Facebook experimenting on you to see if they can make you sad without your knowledge

3·1 year ago

Why should they? Copyright is an artificial restriction in the first place, that exists “To promote the Progress of Science and useful Arts” (in the US, but that’s where most companies are based). Why should we allow further legal restrictions that might strangle the progress of science and the useful arts?

What many people here want is for AI to help as many people as possible instead of just making some rich fucks richer. If we try to jam copyright into this, the rich fucks will just use it to build a moat and keep out the competition. What you should be advocating for instead is something like a mandatory GPL-style license, where anybody who uses the model or contributed training data to it has the right to a copy of it that they can run themselves. That would ensure that generative AI is democratizing. It also works for many different issues, such as biased models keeping minorities in jail longer.

tl;dr: Advocate for open models, not copyright

4·1 year ago

I wouldn’t be concerned about that; the mathematical models make assumptions that don’t hold in the real world. There’s still plenty of guidance in the loop from things such as humans up/downvoting, and people generating several to many pictures before selecting the best one to post. There are also, as you say, lots of places with strong human curation, such as Wikipedia or official documentation for various tools. There’s also the option of running better models against old datasets as the tech progresses.

119·1 year ago

The rules I’ve seen proposed would kill off innovation, and allow other countries to leapfrog whatever countries tried to implement them.

What rules do you think should be put in place?

2125·1 year ago

Good to hear that people won’t be able to weaponize the legal system into holding back progress.

EDIT, tl;dr from below: Advocate for open models, not copyright. It’s the wrong tool for this job.

7·1 year ago

Kind of looks like an alternative universe where Rust really leaned into its initial Ruby influences. IMO the most interesting thing was kicked down the road; I’d like to see more of the plan for concurrency. Go’s concurrency (which it says they’re thinking of) kind of sucks for lots of things, like “do these tasks in parallel and give me the return values”. Go can do it with channels and all that, but Rayon’s `par_iter()` just magically makes it all work nicely.
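Rayon is a third-party crate, so to keep this self-contained, here’s a std-only sketch of the “run these tasks in parallel and give me the return values” pattern using scoped threads (`std::thread::scope`, stable since Rust 1.63). This is not how Rayon works internally (Rayon uses a work-stealing thread pool rather than one thread per task), and `expensive` and `parallel_map` are hypothetical names. With Rayon the body would be roughly `inputs.par_iter().map(|&x| expensive(x)).collect()`.

```rust
use std::thread;

// Hypothetical stand-in for some expensive computation.
fn expensive(x: u64) -> u64 {
    (1..=x).sum()
}

/// Runs `expensive` on each input in its own scoped thread and returns
/// the results in the same order as the inputs.
fn parallel_map(inputs: &[u64]) -> Vec<u64> {
    thread::scope(|s| {
        // Spawn every task first so they all run concurrently...
        let handles: Vec<_> = inputs
            .iter()
            .map(|&x| s.spawn(move || expensive(x)))
            .collect();
        // ...then join them in input order; unwrap re-panics if a task panicked.
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    })
}

fn main() {
    let results = parallel_map(&[10, 100, 1000]);
    println!("{:?}", results); // [55, 5050, 500500]
}
```

Collecting the handles before joining matters: joining inside the same `map` would wait on each thread before spawning the next, which serializes the work.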

You should believe it as much as you want. I don’t have any inside knowledge myself, I just remembered an HN comment that was relevant to this post and linked it.

142·1 year ago

Apparently there’s an effort underway. I don’t have any more context than this:

https://news.ycombinator.com/item?id=38020117

I will say that I actually like the flat namespace, but don’t have a strong opinion.

I understand you’re upset, but my sources have been quite clear and straightforward. You should actually read them, they’re quite nicely written.

So you admit that you were wrong?

From what I understand, Ada does not have an equivalent to Rust’s borrow checker. There are efforts to replicate that for Ada, but it’s not there yet.