Thanks for giving it a good read-through! If you’re moving to NVMe SSDs, you may find some of your problems just go away. The difference can be insane.

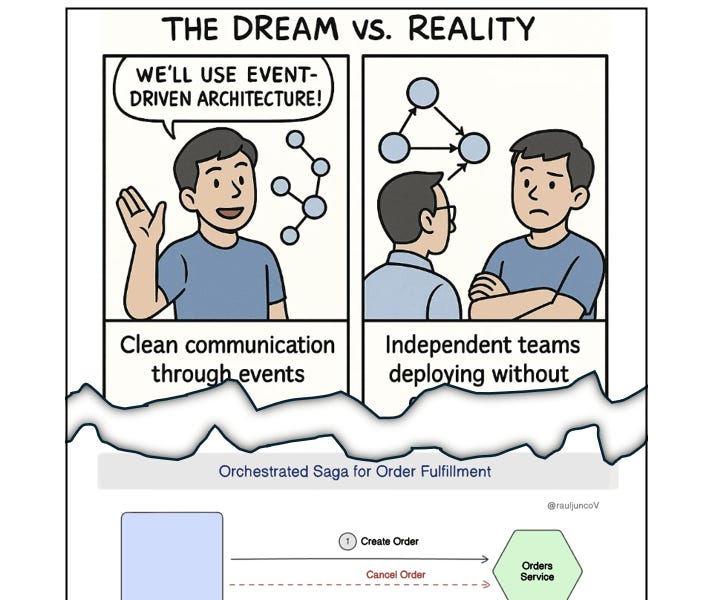

I was reading recently about databases (or disk layouts) designed for business applications versus ones designed for reporting, and one difference was how the data sits on disk: laid out by row versus by column.
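Here's a toy sketch of the row-vs-column idea, assuming a made-up three-column table of 32-bit ints packed into a byte buffer. `ROWS` and all the function names are hypothetical, and real databases add pages, compression, indexes and a lot more on top. The point it shows: summing one column (a typical reporting query) reads a single contiguous slice in the column layout but has to stride through every record in the row layout, while fetching one whole record (a typical business-app lookup) is the reverse.

```python
import struct

# Hypothetical table: (order_id, customer_id, amount_cents), all 32-bit ints.
ROWS = [
    (1, 101, 2500),
    (2, 102, 1200),
    (3, 101, 9900),
    (4, 103, 450),
]
FMT = "<i"                    # one little-endian 32-bit int
WIDTH = struct.calcsize(FMT)  # 4 bytes per value
NCOLS = 3


def to_row_layout(rows):
    """Row-oriented: all fields of record 0, then all fields of record 1, ..."""
    return b"".join(struct.pack("<3i", *row) for row in rows)


def to_column_layout(rows):
    """Column-oriented: all values of column 0, then all of column 1, ..."""
    return b"".join(struct.pack(f"<{len(rows)}i", *col) for col in zip(*rows))


def sum_column_row_layout(buf, col, nrows):
    # Values of one column are NCOLS * WIDTH bytes apart, so a scan has to
    # hop over the other fields of every record.
    total = 0
    for r in range(nrows):
        offset = (r * NCOLS + col) * WIDTH
        total += struct.unpack_from(FMT, buf, offset)[0]
    return total


def sum_column_column_layout(buf, col, nrows):
    # The whole column is one contiguous slice, so the scan reads only it.
    start = col * nrows * WIDTH
    return sum(struct.unpack_from(f"<{nrows}i", buf, start))


def fetch_record_row_layout(buf, r):
    # One contiguous read: the record's fields sit next to each other.
    return struct.unpack_from("<3i", buf, r * NCOLS * WIDTH)


def fetch_record_column_layout(buf, r, nrows):
    # One read per column: the record's fields are scattered across the buffer.
    return tuple(
        struct.unpack_from(FMT, buf, (c * nrows + r) * WIDTH)[0]
        for c in range(NCOLS)
    )


if __name__ == "__main__":
    n = len(ROWS)
    row_buf = to_row_layout(ROWS)
    col_buf = to_column_layout(ROWS)
    print(sum_column_row_layout(row_buf, 2, n))          # 14050
    print(sum_column_column_layout(col_buf, 2, n))       # 14050
    print(fetch_record_row_layout(row_buf, 2))           # (3, 101, 9900)
    print(fetch_record_column_layout(col_buf, 2, n))     # (3, 101, 9900)
```

Both layouts hold the same 48 bytes and give the same answers; what changes is which bytes you have to touch to get them, which is why one layout tends to suit reporting and the other suits record-at-a-time apps.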

I’m not entirely sure. I spent more than a year in Latin America and came back to prices about 2-3x what I remember. Even before I left, groceries were already about 2x what they were before COVID.

Shit’s fucking expensive in the US.